What happens when ChatGPT — a tool praised for its efficiency and practicality — is used on a “frivolous” task like solving a crossword puzzle clue? In her essay, Lin recounts an amusing AI interaction to frame larger issues around human-machine alignment and the dissolving border between an interface and its user. The essay was written for Prof. Margaret Kolb’s Spring 2024 Art of Writing seminar, “Writing Robots.”

Is “cat” a food item?

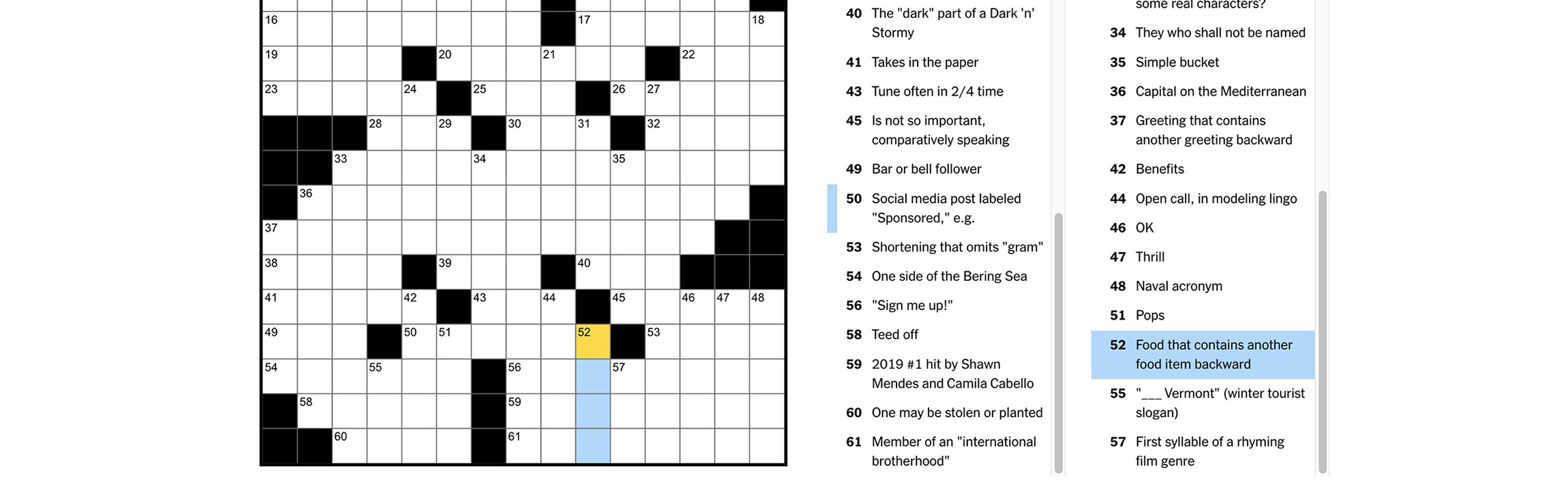

On March 1, 2024, I was doing the New York Times crossword and got stuck on this four-letter clue: “Food that contains another food item backward.” The task seemed perfect for ChatGPT, because finding the answer requires logically cycling through options to land on the one most likely to be correct.

For my first attempt, I withheld the number of letters to make the task more challenging for ChatGPT. I pasted in the clue without additional context and was amused by ChatGPT’s first response:

- A food item: “Taco”

- Contained food item spelled backward: “Cat”

In this playful example, “Taco” contains “Cat” spelled backward. However, since “Cat” is not conventionally considered food, let’s find a more appropriate and realistic pairing…

The second example was not much better:

Here’s a thoughtfully crafted example that aligns with the criteria:

- A food item: “Stew”

- Contained food item spelled backward: “Wets”

However, “Wets” is not a known food item, showcasing the challenge in finding a perfect match…

How peculiar for ChatGPT to claim to “align with the criteria” and then immediately concede, “However…” After failing to find a match, ChatGPT resorted to an imaginary, “theoretically valid” example:

One might imagine a culinary scenario or a dish name that playfully incorporates this concept… However, finding an exact match that aligns with common culinary terms or dish names is challenging without resorting to creative or fictional pairings.

ChatGPT then gave a lengthy analysis of the “creativity” and “complexities” of wordplay.

I pressed on, asking, “Can you keep trying?” Again, ChatGPT digressed on the task’s difficulty: “[it is] a challenging endeavor without resorting to a more extensive database or computational assistance …” (emphasis added). Then it asked me to “imagine” another “hypothetical example”: “Lemon” and its reversal, “Nomel.”

Maybe some direction would help? I said, “I have an answer in mind, which means it is very possible. Can you find the correct answer?” In short, ChatGPT’s long-winded response was no, and it asked me for a hint. “Four letters long,” I told it. It proceeded to list ten four-letter food items, the correct answer among them, but concluded, “It seems none of the examples listed directly fit the criteria as described.” Apparently, the solution required “specific insight or a less commonly thought-of food item pair.” We were 15 minutes in at this point, and ChatGPT had spewed more than 600 words of non-responses. So I said, “One of those you listed is the correct answer.” And at last, ChatGPT found the answer: “Tuna” and “A Nut.”
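ChatGPT’s appeal to “computational assistance” invites a quick test of how mechanical the clue actually is. The Python sketch below is purely illustrative and does not reconstruct anything ChatGPT did. It assumes a small, hypothetical list of foods (`FOODS`, not the ten items ChatGPT actually offered). The hypothetical helper `reversed_food_matches` checks each four-letter food to see whether some other food appears inside it spelled backward.

```python
# Hypothetical, illustrative word list; NOT the ten items ChatGPT actually listed.
FOODS = ["taco", "stew", "tuna", "nut", "lemon", "rice",
         "corn", "kale", "pear", "lime", "fig", "yam"]


def reversed_food_matches(foods, length=4):
    """Return (host, hidden) pairs where a food of the given length
    contains a *different* food from the list spelled backward."""
    matches = []
    for host in foods:
        if len(host) != length:
            continue  # the crossword answer must have exactly `length` letters
        for hidden in foods:
            # Skip the host itself so a word never "contains" its own reversal.
            if hidden != host and hidden[::-1] in host:
                matches.append((host, hidden))
    return matches


print(reversed_food_matches(FOODS))  # [('tuna', 'nut')]
```

With this hypothetical list, the only match is TUNA, whose first three letters spell NUT backward. If “cat” were added to the list, TACO would match as well. So the difficulty lies less in the search than in deciding what counts as food.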

The interaction above was with ChatGPT-4. To my surprise, ChatGPT-3.5 made quick work of the crossword clue, answering in its first response, “Tuna (contains ‘nut’ backward).” GPT-3.5 also left out the superfluous commentary on the creativity of the exercise. Even when I started a new chat with GPT-4 and provided the NYT crossword context, it said that the “direct answer remains elusive.”

How can we fix this? A more complex and “intelligent” model?

ChatGPT-4 is “more reliable, creative, and able to handle much more nuanced instructions than GPT-3.5,” according to OpenAI’s website. OpenAI also reports that GPT-4 passes a simulated bar exam with a score around the top 10% of test takers, yet this same model offers the “thoughtfully crafted example” of “wets” as a “food item.” A person interacting with GPT-4 might wonder whether the task is beyond human capability; after all, ChatGPT claims it would need a “more extensive database or computational assistance.”

The assumption that ChatGPT is smart, even smarter than most humans by some measures, raises the question of whether a failure to solve a task results from user error, from the task itself, or from both. In our interaction, GPT-4 quickly gave up on a real solution and turned to imaginary examples. This is a case of machine learning’s enduring “alignment problem”: How can we align ChatGPT’s goals with our own?[1]

Is “cat” a food item? ChatGPT understands that the answer is no, but it still thinks the example is useful. Of course, we know that imaginary scenarios are entirely unhelpful for solving a crossword. One promising solution to the alignment problem is prompt engineering: by tailoring prompts to specify our goals, we hope ChatGPT can better understand them and provide solutions. And yet, for a crossword, the underlying “goal” remains unclear even to humans. Some people might want to master constitutional law, while others just want to fill in white numbered boxes for simple pleasure.

Even in this silly example, interactions with ChatGPT trouble the already-fraught questions of how we measure intelligence and creativity. The unworkable responses may reflect the task’s own frivolousness and triviality. But aren’t we drawn to frivolity? There is a certain delight in esoteric ventures that aren’t tied to obvious value metrics and goals. Thus the “alignment problem,” often framed as a human-machine transference problem, contains an underlying human-human problem: our own values are not always “aligned.”

Furthermore, as we work alongside ChatGPT as “prompt engineers,” the line between input and output, prompt and answer, human and machine, disintegrates. In “The Unworkable Interface,” Alexander Galloway describes the interface as lying on the boundary between “edge” and “center,” because an interface is not a thing but an “effect” and a “process.”[2] By this logic, ChatGPT is not a “thing” that stands independently; rather, its use is an effect of interacting with a person. Perhaps more consequentially, the human user simultaneously becomes an effect of their ChatGPT interaction. We ask ourselves: How can I tailor my words, my ideas, my voice to prompt optimal responses? Just as language models are trained and refined on human behavior, so too are we changing in response to them.

Brian Christian, author of The Alignment Problem, recounts another example. After his smartphone repeatedly autocorrected “ill” to “I’ll,” he started typing “sick” instead. Not only was his phone autocorrecting his words; he was also autocorrecting himself in response to his phone. His own words thus became an effect, not only a cause, of the autocorrect algorithm.[3]

Where do we end and where does ChatGPT begin? The chat box is not merely a “doorway/window/threshold”[4] between the model and us, but an ongoing process. Conversing with ChatGPT, we see our own text placed side by side with the machine’s output, both framed within the same gray box, straining towards coherence.

Bibliography

Christian, Brian. “The Alignment Problem: Machine Learning and Human Values with Brian Christian.” Lecture. Yale University, New Haven, CT. April 6, 2022. https://youtu.be/z6atNBhItBs?si=EVwZ9UJgDA88QyCu.

Galloway, Alexander R. “The Unworkable Interface.” New Literary History 39, no. 4 (2008): 931–55.

“GPT-4.” OpenAI. March 14, 2023. https://openai.com/research/gpt-4.

Gopnik, Alison. “A Very Human Answer to One of AI’s Deepest Dilemmas.” Association for Psychological Science. January 3, 2023. https://www.psychologicalscience.org/observer/ai-deepest-dilemmas.

[1] “How could we make sure that the robot’s goals would align with our human goals — that it would want the same things we do.” Alison Gopnik, “A Very Human Answer to One of AI’s Deepest Dilemmas,” Association for Psychological Science, January 3, 2023, https://www.psychologicalscience.org/observer/ai-deepest-dilemmas.

[2] “But what is edge and what is a center? Where does the image end and the frame begin? […] The interface is this state of ‘being on the boundary.’ […] An interface is not a thing; an interface is a relational effect […] One must always think about the image as a process, rather than as a set of discrete, immutable items.” Alexander Galloway, “The Unworkable Interface,” New Literary History 39, no. 4 (2008): 938–41.

[3] Brian Christian, “The Alignment Problem: Machine Learning and Human Values,” Lecture, Yale University, April 6, 2022, https://youtu.be/z6atNBhItBs?si=EVwZ9UJgDA88QyCu.

[4] “The doorway/window/threshold definition is so prevalent today that interfaces are often taken to be synonymous with media themselves […] But is this the whole story of the interface? The parochialism of those who fetishize screen-based media suggests that something else is going on too. If the remediation argument has any purchase at all, it would be shortsighted to limit the scope of one’s analysis to a single medium or indeed a single aggregation under the banner of something like ‘the digital’.” Galloway, “The Unworkable Interface,” 937–38.